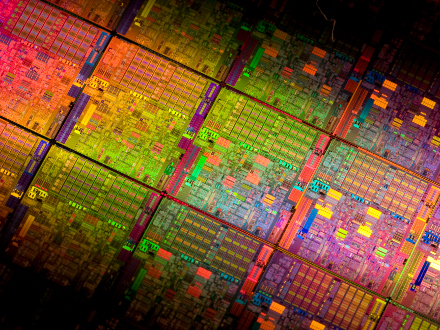

In 2017, NVIDIA released the Deep Learning Accelerator (NVDLA), a free and open-source technology designed for embedded and IoT applications. Thanks to its scalability and configurability, NVDLA has been integrated into NVIDIA's latest Jetson AGX Orin module, which is priced at $1,990. According to recent posts in the Linux Kernel Mailing List archive, driver code for NVIDIA's Deep Learning Accelerator has been proposed for merging into the Linux kernel to provide mainline support. The proposal is still at an early stage, and it will likely take some time before open-source developers can make substantial contributions.

NVIDIA's NVDLA is delivered as a set of IP-core models based on open-standard technology: a Verilog model and a simulation model in RTL form, plus a TLM SystemC simulation model for software development, testing, and system integration. The software stack includes infrastructure for converting existing deep learning models into a form that the on-device software can use. The open-source NVDLA hardware and software are released under the NVIDIA Open NVDLA License and are available from the project's GitHub repository.

NVDLA has seen considerable development since its introduction. Back in 2018, SiFive announced the first open-source RISC-V-based SoC platform integrated with NVDLA. At the Hot Chips conference, SiFive demonstrated NVDLA running on an FPGA connected via ChipLink to its HiFive Unleashed board, which is built around the Linux-capable Freedom U540 RISC-V processor and targets edge applications.

“NVIDIA open-sourced its NVDLA architecture to drive the adoption of AI,” said Deepu Talla, vice president and general manager of Autonomous Machines at NVIDIA. “Our collaboration with SiFive enables customized AI silicon solutions for emerging applications and markets where the combination of RISC-V and NVDLA will be very attractive.”

Another recent development involving NVDLA on a RISC-V-based platform comes from a research article that evaluated NVDLA's performance by running the YOLOv3 object-detection algorithm. According to the authors:

“We further analyze the performance by showing that sharing the last-level cache with NVDLA can result in up to 1.56x speedup. We then identify that sharing the memory system with the accelerator can result in unpredictable execution time for the real-time tasks running on this platform.”

For more information, head to the Linux Kernel mailing list archive.