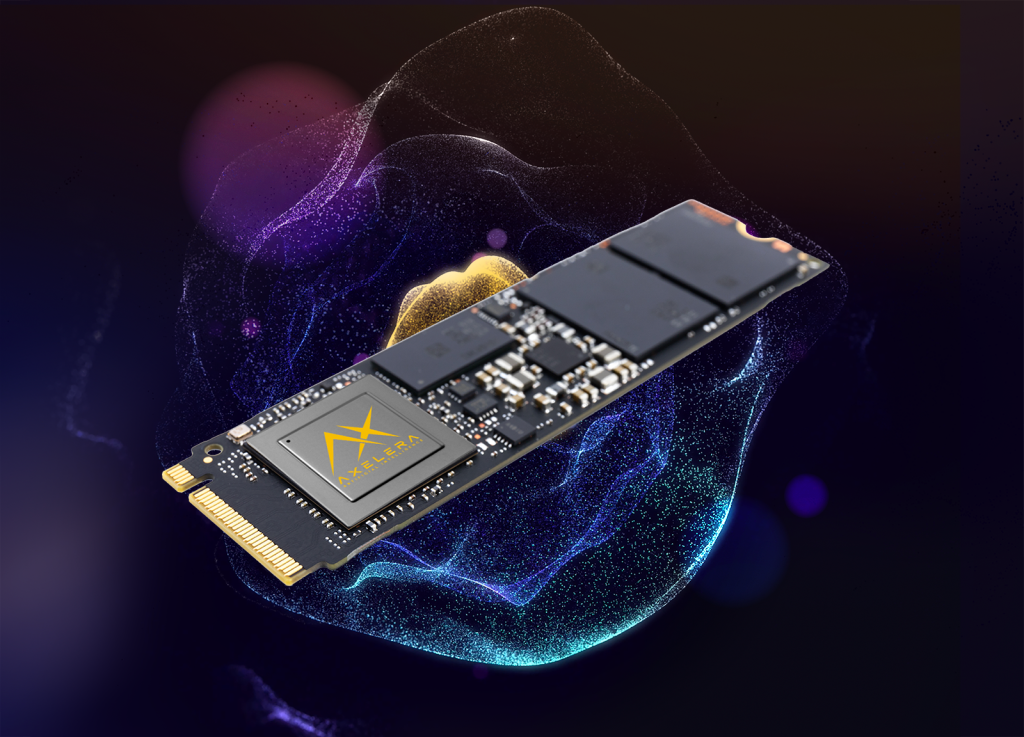

The M.2 AI Edge accelerator module (pictured) contains a single Metis AI processing unit (AIPU) chip, while the PCIe AI Edge accelerator card contains four AIPUs.

The company says the Metis AI hardware and software platform is designed to accelerate computer vision at the edge and to support customers through the evaluation-to-development cycle.

The Metis AIPU is based on a quad-core architecture. Each core can execute all layers of a standard neural network without external interactions, delivering up to 53.5 TOPS of AI processing power, for a compound throughput of 214 TOPS across the four cores.

The cores can either be combined to boost throughput or operate independently on the same neural network to reduce latency. A third option is to process different neural networks concurrently, for applications built around pipelines of neural networks.
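When the cores run different networks concurrently as a pipeline, a simple idealised model shows how the stages interact: end-to-end latency is the sum of the stage latencies, while steady-state throughput is set by the slowest stage. The sketch below assumes one network per core, perfectly overlapped frames and no data-transfer overhead. The function name, stage names and timings are hypothetical, not Metis measurements or Voyager SDK APIs.

```python
from typing import Dict, Tuple

MAX_CORES = 4  # Metis AIPU has four cores; one pipeline stage per core assumed


def pipeline_stats(stage_latency_ms: Dict[str, float]) -> Tuple[float, float]:
    """Idealised pipeline model: returns (end_to_end_latency_ms, steady_state_fps).

    Assumes each network (stage) runs on its own core, consecutive frames
    overlap perfectly across stages, and inter-core transfers cost nothing.
    """
    if not 1 <= len(stage_latency_ms) <= MAX_CORES:
        raise ValueError(f"expected 1 to {MAX_CORES} stages, one per core")
    latencies = list(stage_latency_ms.values())
    end_to_end_ms = sum(latencies)   # a frame passes through every stage
    fps = 1000.0 / max(latencies)    # the slowest stage limits throughput
    return end_to_end_ms, fps


# Hypothetical stage timings, for illustration only
latency, fps = pipeline_stats({"detector": 8.0, "tracker": 2.0, "classifier": 4.0})
print(f"latency {latency} ms, throughput {fps} fps")  # latency 14.0 ms, throughput 125.0 fps
```

In this model, speeding up the tracker would not raise throughput at all; only the slowest stage matters.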

The SRAM-based digital in-memory computing (D-IMC) engine is described by the company as a “radically different approach to matrix vector multiplications”, which make up 70 to 90% of all deep learning operations. “Crossbar arrays of memory devices are used to store a matrix and perform matrix-vector multiplications in parallel without intermediate movement of data,” explained Paul Neil, vice president of product management at Axelera AI.
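A matrix-vector multiplication computes each output element as the dot product of one matrix row with the input vector. The pure-Python sketch below models that arithmetic with the matrix held fixed, as it would be in a crossbar array. It illustrates the operation itself, not Axelera's circuit design; the function name is hypothetical and the INT8 range check is an assumption based on the INT8 precision quoted for the engine.

```python
from typing import List

INT8_MIN, INT8_MAX = -128, 127


def crossbar_mvm(weights: List[List[int]], x: List[int]) -> List[int]:
    """Software model of a crossbar matrix-vector multiply.

    `weights` plays the role of the matrix stored in the memory array: it
    stays in place, and each output element is the dot product of one stored
    row with the broadcast input vector. Hardware would evaluate all rows in
    parallel; this loop evaluates them one after another.
    """
    for value in [v for row in weights for v in row] + list(x):
        if not INT8_MIN <= value <= INT8_MAX:
            raise ValueError(f"{value} is outside the INT8 range")
    if any(len(row) != len(x) for row in weights):
        raise ValueError("every weight row must match the input length")
    # Products of INT8 values are accumulated in Python ints (wider than INT8),
    # mirroring the wider accumulators typically used for INT8 arithmetic.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


print(crossbar_mvm([[1, 2, 3], [4, 5, 6]], [1, 0, -1]))  # [-2, -2]
```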

“This massively parallel ‘in-place compute’ contributes to the remarkable energy efficiency of the architecture,” he added. The engine accelerates matrix-vector multiplication operations with an energy efficiency of 15 TOPS/W at INT8 precision. Axelera AI added that FP32 iso-accuracy (accuracy equivalent to the FP32 model) is achieved without the need to retrain the neural network models.
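The efficiency figure converts directly into energy per operation, because 1 TOPS/W is 10^12 operations per joule. The sketch below does that conversion and, as a purely hypothetical illustration, computes the power 214 TOPS would draw if 15 TOPS/W held at full throughput. The article does not say whether the two figures apply at the same operating point, so the second number is arithmetic, not a published power rating; the function names are illustrative.

```python
OPS_PER_TERA = 1e12


def energy_per_op_joules(tops_per_watt: float) -> float:
    """1 TOPS/W means 1e12 operations per joule, so energy per op is the inverse."""
    return 1.0 / (tops_per_watt * OPS_PER_TERA)


def power_at_throughput_watts(tops: float, tops_per_watt: float) -> float:
    """Power implied IF a given throughput were sustained at a given efficiency."""
    return tops / tops_per_watt


print(f"{energy_per_op_joules(15) * 1e15:.1f} fJ per operation")  # 66.7 fJ per operation
print(f"{power_at_throughput_watts(214, 15):.1f} W")  # 14.3 W
```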

Four cores are integrated into a system-on-chip (SoC) alongside a RISC-V controller, a PCIe interface, an LPDDR4X controller and a security complex, all connected via a high-speed network on chip (NoC). The NoC also connects the cores to a multi-level shared memory hierarchy with more than 52 MiB of high-speed on-chip memory. The LPDDR4X controller connects to external memory, which enables support for larger neural networks.
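Whether a network's weights fit in on-chip memory or must spill to external LPDDR4X memory comes down to simple arithmetic. The sketch below is a back-of-the-envelope check only: it assumes the 52 MiB figure is the usable budget, counts weights only (no activations or buffers), and uses a hypothetical helper name.

```python
MIB = 2 ** 20  # bytes per mebibyte
ON_CHIP_BUDGET_MIB = 52  # article quotes "more than 52 MiB"; treated here as the budget


def weights_fit_on_chip(num_params: int, bytes_per_param: int = 1,
                        budget_mib: float = ON_CHIP_BUDGET_MIB) -> bool:
    """Rough check: do the weights alone fit in on-chip memory?

    Ignores activations, buffers and any compiler-specific layout, so a True
    result is necessary but not sufficient for running without external DRAM.
    """
    return num_params * bytes_per_param <= budget_mib * MIB


# ~25.6M parameters, roughly the size of ResNet-50
print(weights_fit_on_chip(25_600_000, bytes_per_param=1))  # True  (INT8, ~24.4 MiB)
print(weights_fit_on_chip(25_600_000, bytes_per_param=4))  # False (FP32, ~97.7 MiB)
```

The same network that fits comfortably at INT8 would overflow the on-chip budget at FP32, which is one reason low-precision inference and the external memory path both matter.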

A PCIe interface provides a high-speed link to an external host, which will offload full neural network applications to the Metis AIPU.

The PCIe card is powered by four Metis AIPUs and delivers up to 856 TOPS.
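The quoted peak figures follow from straightforward multiplication, from core to chip to card, as the short calculation below shows. These are peak numbers; sustained throughput on a real network depends on the workload.

```python
CORES_PER_AIPU = 4
TOPS_PER_CORE = 53.5  # peak figure quoted for each core
AIPUS_PER_M2_MODULE = 1
AIPUS_PER_PCIE_CARD = 4

tops_per_aipu = CORES_PER_AIPU * TOPS_PER_CORE
print(f"M.2 module: {AIPUS_PER_M2_MODULE * tops_per_aipu} TOPS")  # M.2 module: 214.0 TOPS
print(f"PCIe card:  {AIPUS_PER_PCIE_CARD * tops_per_aipu} TOPS")  # PCIe card:  856.0 TOPS
```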

There is also a software stack, the Voyager software development kit (SDK), which includes an integrated compiler, a runtime stack and optimisation tools that enable customers to import their own models.

Application templates speed up development; customers can choose from templates in the Model Zoo library of neural networks. Templates address common vision processing tasks, such as image classification, segmentation, object detection and tracking, optimised for deployment on the AIPU. The neural networks can be customised, fine-tuned or deployed ‘as-is’. Customers’ pre-trained neural networks can also be imported.

The Voyager SDK automatically quantises and compiles neural networks trained in different frameworks, generating code that runs on the Metis AI platform. According to Axelera AI, optimised networks running on the Metis AIPU are indistinguishable in accuracy from those running on systems with floating-point units.
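Quantisation maps floating-point weights onto a small integer grid. The sketch below shows one common textbook scheme, symmetric per-tensor INT8 quantisation. The article does not describe Voyager's actual algorithm, which may use per-channel scales, calibration data or other refinements, so treat this as an illustration of the general idea only; the function names and weight values are hypothetical. With round-to-nearest, the error per weight is at most half a quantisation step.

```python
from typing import List, Tuple


def quantise_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantisation: value ≈ q * scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest magnitude to ±127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantise(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate floating-point values."""
    return [qi * scale for qi in q]


weights = [0.42, -1.3, 0.07, 0.9]  # hypothetical FP32 weights
q, scale = quantise_int8(weights)
restored = dequantise(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error {max_error:.4f} (bound {scale / 2:.4f})")
```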

Typical application environments range from fully embedded use cases to distributed processing of multiple 4K video streams across networked devices.

“We are working with our development partners to integrate both the M.2 module and the PCIe card into gateway and server units to support camera networks of various sizes,” said Neil. “We’re also working with development partners to create an Edge Appliance integrating four PCIe cards to provide data-centre-class compute.”

The early access program opens today and the module and card will be available in early 2023.

The Metis platform will be exhibited at CES 2023 next month in Las Vegas, USA.