Nvidia’s RTX 40 Series laptop GPUs are here, but the raw horsepower of these cards matters less than DLSS 3.0, which could allow the gaming laptop to kill the gaming desktop.

Why? Well, with a little help from AI, it turns out you can get a frame rate 177% higher than without it in our Cyberpunk testing, at the same graphical fidelity settings across the board. No longer do you have to do the dance of choosing which options to compromise on to push up that FPS count.

So, how does it work, what does it look like in practice, and how much faster do your games run with it? We’ve done the tests and have everything you need to know about DLSS 3.0 right here.

What is DLSS 3.0?

The third generation of Nvidia’s Deep Learning Super Sampling (DLSS) technology uses an AI-driven technique capable of boosting frame rates by an impressive amount (more on the specific numbers later).

What is it doing in the background? Basically, it’s a spin on supersampling and anti-aliasing: the game renders each frame at a lower internal resolution, which lightens the load on the GPU, and the image is then upscaled to your display’s output resolution. Within these fine margins, you can get away with a lot without gamers noticing any change in visual fidelity.
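To make the “render low, display high” idea concrete, here’s a toy Python sketch. It is not Nvidia’s method: the hypothetical `render` function stands in for the GPU’s shading work, and a plain nearest-neighbour upscaler stands in for the neural network DLSS actually uses. Halving the width and height means only a quarter of the output pixels get shaded.

```python
# Toy illustration of "render low, display high" (NOT Nvidia's algorithm).
# DLSS uses a neural network to upscale; nearest-neighbour is used here
# only because it is easy to follow.

Image = list[list[float]]

def render(width: int, height: int) -> Image:
    """Hypothetical stand-in for the GPU's shading work: a simple gradient."""
    return [[(x + y) / (width + height) for x in range(width)] for y in range(height)]

def upscale_nearest(img: Image, out_w: int, out_h: int) -> Image:
    """Map each output pixel back to the nearest source pixel."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]

out_w, out_h = 16, 10                    # toy "display" resolution
low = render(out_w // 2, out_h // 2)     # shade only half the width and height
frame = upscale_nearest(low, out_w, out_h)

shaded = len(low) * len(low[0])
displayed = len(frame) * len(frame[0])
print(f"shaded {shaded} of {displayed} pixels ({shaded / displayed:.0%})")
```

The GPU only pays for the small frame; the upscaler fills in the rest, and the quality of that fill-in is exactly where DLSS’s AI earns its keep.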

The additional ingredient is the “Deep Learning” part of the name, which takes much of the pressure of rendering frames at a higher resolution off the GPU and relies on AI to predict frames and deliver them. This is the result of a convolutional neural network (CNN) that Nvidia has relentlessly trained on supercomputers, by showing it low-resolution images alongside the same pictures rendered as 64x supersampled reference images.
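Here’s a minimal sketch of the kind of supervised training pair described above, assuming a generic super-resolution setup rather than Nvidia’s actual pipeline: a high-quality reference frame is averaged down into a low-resolution input, and the error between an upscaled guess and the reference is what training tries to shrink. The 2x factor, the tiny 4x4 frame, and all function names are illustrative assumptions; a naive nearest-neighbour upscaler plays the role of an untrained model.

```python
# Generic super-resolution training-pair sketch (assumption, not Nvidia's pipeline).

Image = list[list[float]]

def box_downsample(img: Image, factor: int) -> Image:
    """Average each factor x factor block into one low-resolution pixel."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(w // factor)
        ]
        for y in range(h // factor)
    ]

def upscale_nearest(img: Image, factor: int) -> Image:
    """Naive stand-in for an untrained model: repeat each pixel factor x factor times."""
    return [[row[x // factor] for x in range(len(row) * factor)]
            for row in img for _ in range(factor)]

def mse(a: Image, b: Image) -> float:
    """Mean squared error: the kind of gap a trained network tries to shrink."""
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)

# Hypothetical 4x4 "high-quality reference" frame.
target = [[float(x * y) for x in range(4)] for y in range(4)]
low_res_input = box_downsample(target, 2)      # what the network would see
baseline = upscale_nearest(low_res_input, 2)   # an untrained guess
print(low_res_input)
print(f"baseline MSE: {mse(baseline, target):.3f}")
```

Training, in this framing, means adjusting the model so its upscaled output drives that error down across many such pairs.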

Alongside this, the network also draws on temporal data from previous frames, which helps it predict where an object in an image will move over time, resulting in a solid predictive loop to shore up the frame rate. The end result is a far smoother-looking picture.
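The temporal idea can be sketched with a single moving object. This toy Python example (a simplification, not Nvidia’s algorithm) takes an object’s position in two rendered frames, derives its motion vector, and either places it halfway for a generated in-between frame or extrapolates it forward assuming constant velocity. The `Point` type and the function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Point:
    x: float
    y: float

def motion_vector(prev: Point, curr: Point) -> Point:
    """How far the object moved between two rendered frames."""
    return Point(curr.x - prev.x, curr.y - prev.y)

def interpolate(prev: Point, curr: Point, t: float = 0.5) -> Point:
    """Position at fraction t of the way between two rendered frames."""
    return Point(prev.x + (curr.x - prev.x) * t, prev.y + (curr.y - prev.y) * t)

def extrapolate(prev: Point, curr: Point) -> Point:
    """Assume constant velocity and predict the next frame's position."""
    v = motion_vector(prev, curr)
    return Point(curr.x + v.x, curr.y + v.y)

frame_a = Point(100.0, 40.0)   # hypothetical object position in rendered frame A
frame_b = Point(120.0, 44.0)   # ...and in rendered frame B
print(interpolate(frame_a, frame_b))   # position for a generated in-between frame
print(extrapolate(frame_a, frame_b))   # constant-velocity guess for the next frame
```

When motion isn’t that tidy, say an object suddenly changes direction, a guess like this lands in the wrong place, which is one intuitive way to think about where artifacting comes from.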

How it actually looks

So, just like any company, Nvidia has carefully chosen in-game shots that make its product look good, where you barely notice the supersampling technique at work!

In reality, you can see the effect to differing degrees depending on which DLSS mode you have on. Don’t get me wrong, it doesn’t hurt the gameplay experience, but you deserve an honest representation of what each mode does so you can draw your own conclusions.

The quality mode is, as you’d expect, the one for those who prefer to maintain visual fidelity while boosting frame rate. In my side-by-sides, you’ll struggle to see any artifacting whatsoever, as the DLSS effect is very subtle.

For the best of both worlds, balanced mode has you covered. In this mode, you can start to see a slightly fuzzy outline around certain objects in the distance, but in the heat of gameplay, you’re not going to notice the effect working hard to boost that frame rate.

And finally, if you want to prioritize a super-fast frame rate, performance or ultra performance modes will achieve this, but at what cost? Going back to the bar scene, you can see a lot more artifacting around the NPCs and objects as the neural network tries to determine where things are going. Not only that, it also affects certain ray-traced elements, such as the bloom of the arrow lighting.
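One way to see why the faster modes show more artifacting is to look at how few pixels each mode actually renders before upscaling. The sketch below assumes the commonly cited default per-axis render scales for each DLSS mode (games can override these, so treat the numbers as assumptions), applied to the 2560 x 1600 resolution used in our Cyberpunk test. `DLSS_SCALE` and `internal_resolution` are illustrative names.

```python
# ASSUMED default per-axis render scales per DLSS mode; individual games may differ.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}

def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Resolution the GPU renders at before DLSS upscales to the output."""
    s = DLSS_SCALE[mode]
    return round(out_w * s), round(out_h * s)

OUT_W, OUT_H = 2560, 1600
for mode in DLSS_SCALE:
    w, h = internal_resolution(OUT_W, OUT_H, mode)
    share = (w * h) / (OUT_W * OUT_H)
    print(f"{mode:>17}: {w} x {h} ({share:.0%} of output pixels)")
```

At the assumed Ultra Performance scale, the network has to reconstruct roughly nine output pixels from every rendered one, which fits with the extra fuzziness around NPCs in that mode.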

As I said up top, none of this hurts the gameplay of single-player titles like these: the slight additional latency can barely be felt, and any artifacting at high frame rates doesn’t hinder your view of what’s ahead. But while DLSS 3.0 offers two extremes, it may be worth going all Goldilocks and picking the “just right” balanced option.

DLSS 3.0 tested

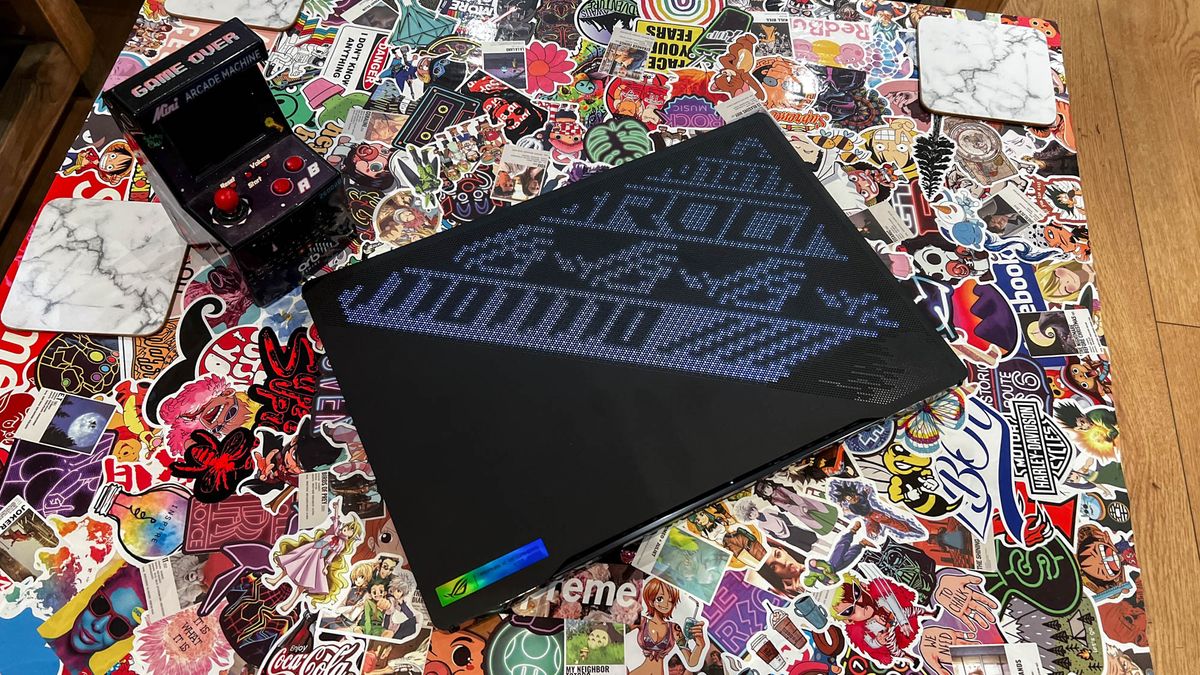

So, we’ve talked a big game. What’s the actual impact of DLSS 3.0? Our own tests indicate one very clear thing: Nvidia isn’t kidding around. This is quite the paradigm shift in PC gaming on the go. These numbers come from my own benchmarking on the RTX 4090-armed Asus ROG Zephyrus M16.

| Benchmark | Performance mode (no DLSS) | Turbo mode (no DLSS) | Turbo + DLSS Balanced | Turbo + DLSS Quality | Turbo + DLSS High Performance |
|---|---|---|---|---|---|
| Cyberpunk (2560 x 1600 Ultra RT) | 53.82 fps | 67.79 fps | 108.78 fps | 95.19 fps | 149.11 fps |
| Hitman 3 (Dartmoor 1920 x 1200 High/Ultra settings RT) | 49.47 fps | 60.69 fps | 129.46 fps | 124.07 fps | 134.06 fps |
| F1 22 (Miami 2560 x 1600 Highest settings) | 50 fps | 62 fps | 151 fps | 133 fps | 168 fps |

No, your eyes do not deceive you. In Cyberpunk, that is a 177% increase in average frame rate over our out-of-the-box Performance mode result, and a roughly 120% jump over Turbo mode. F1 22 climbs even further, at 236% over Performance mode. That is utterly bonkers, especially when you take into account the pure power potential of the 4090 here.
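The percentages come straight from the benchmark table. This short Python sketch recomputes each game’s gain for the Turbo + DLSS High Performance column against both power modes, using (after − before) / before × 100; the `results` dictionary simply copies the table’s averages, and F1 22 turns out to show the largest relative gain.

```python
# Average fps copied from the benchmark table:
# (Performance mode, Turbo mode, Turbo + DLSS High Performance)
results = {
    "Cyberpunk": (53.82, 67.79, 149.11),
    "Hitman 3": (49.47, 60.69, 134.06),
    "F1 22": (50.0, 62.0, 168.0),
}

def pct_increase(before: float, after: float) -> float:
    """Percentage increase going from `before` to `after`."""
    return (after - before) / before * 100

for game, (perf, turbo, dlss) in results.items():
    print(f"{game}: +{pct_increase(perf, dlss):.0f}% vs Performance, "
          f"+{pct_increase(turbo, dlss):.0f}% vs Turbo")
```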

It turns out that AI is not only the answer to making the most of that 240Hz display in the Zephyrus M16; it could also be the answer to making your gaming laptop the next great desktop replacement. With resolution upscaling and frame rates boosted through DLSS, you may not need to buy a massive tower that engulfs your desk anymore.
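For a rough sense of what that means on a 240Hz panel, this sketch converts the Cyberpunk averages from the table into frame times and compares them with the display’s refresh rate. It’s a simplification that ignores variable refresh rate and frame pacing, and the function names are illustrative.

```python
REFRESH_HZ = 240  # Zephyrus M16 panel refresh rate, per the article

def frame_time_ms(fps: float) -> float:
    """Average time between frames, in milliseconds."""
    return 1000.0 / fps

def refresh_coverage(fps: float, refresh_hz: float = REFRESH_HZ) -> float:
    """Simplified share of display refreshes that can show a new frame (capped at 1)."""
    return min(fps / refresh_hz, 1.0)

# Cyberpunk averages from the benchmark table.
for label, fps in [("Turbo, no DLSS", 67.79), ("Turbo + DLSS High Performance", 149.11)]:
    print(f"{label}: {frame_time_ms(fps):.1f} ms/frame, "
          f"{refresh_coverage(fps):.0%} of refreshes get a new frame")
```

The panel refreshes every 1000 / 240 ≈ 4.2 ms, so even the DLSS figure leaves headroom, but it covers far more of those refreshes than native rendering does.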

Outlook

It’s easy to misinterpret the importance of DLSS 3.0. Desktop gamers look upon it as the holy grail, as it unlocks AI resolution upscaling and frame rate boosts unlike anything you’ve seen before.

But on the already lower-resolution display of a gaming laptop, why do you need it? Well, the answer is obvious: frame rate. To see Cyberpunk run so buttery smooth on a portable system is, frankly, mind-blowing to me.

What will be most fascinating to see going forward is how this tech works on lower-priced gaming laptop GPUs. Because sure, with the huge gains seen on the RTX 4090, supersampling is going to be simply glorious. But if Nvidia can pull off similar frame rate improvements on lower-end options too, then we’re in for a true game changer.