The search giant is collaborating with Everyday Robots to bring “together cutting‑edge, natural language research with robots that can learn”.

It’s the PaLM-SayCan project (no, I don’t understand that wording) to help humans interact with companion robots.

Technically, it’s about robotic affordances and the best use of language to help control man-machine interactions. Basically, how to build robotics algorithms that combine the understanding of language models with the actual, real-world capabilities of a helper robot.
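One way to picture that "combining" is the scheme described in the published SayCan research. A language model rates how useful each of the robot's skills would be for the request (the "Say"). A separate affordance (value) function rates how likely that skill is to succeed from the robot's current state (the "Can"). The robot then picks the skill with the highest product of the two. The sketch below assumes that multiplicative combination. The skill names and every score are made-up placeholders, not numbers from Google's system.

```python
from typing import Dict


def pick_skill(lm_scores: Dict[str, float], affordances: Dict[str, float]) -> str:
    """Return the skill with the highest language-model score x affordance score."""
    # Multiplying means a skill the robot cannot perform from its current state
    # (affordance near 0) is suppressed, however relevant the language model finds it.
    combined = {skill: lm_scores[skill] * affordances.get(skill, 0.0) for skill in lm_scores}
    return max(combined, key=combined.get)


# Hypothetical scores for a request like "bring me a snack that'll give me some energy".
lm_scores = {
    "find an energy bar": 0.30,
    "pick up the energy bar": 0.45,  # most 'useful' according to language alone...
    "go to the kitchen": 0.25,
}
affordances = {
    "find an energy bar": 0.60,
    "pick up the energy bar": 0.05,  # ...but no energy bar is within reach yet
    "go to the kitchen": 0.90,
}

print(max(lm_scores, key=lm_scores.get))   # choice from the language model alone
print(pick_skill(lm_scores, affordances))  # choice once real-world ability is factored in
```

With these toy numbers, language alone favours picking up a bar the robot can't reach. The combined score instead sends the robot to the kitchen first, which is the kind of grounding the project is after.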

How best to break down a simple procedure into easy-to-understand steps using appropriate language. A kind of top-down stepwise refinement, it seems to me (remembering very early programming lessons, when coding languages were simply procedural).

Google writes:

“Robots struggle to understand complex instructions that require reasoning. By using an advanced language model, PaLM-SayCan splits prompts into sub-tasks for the helper robot to execute, one-by-one. This also makes it a lot easier for people to get help from robots – just ask using everyday language.”

For example, how best to execute a request such as ‘I’m tired. Bring me a snack that’ll give me some energy, please.’

Apparently, after a task has been requested, PaLM-SayCan uses “chain of thought prompting”, which interprets the instruction in order to score the likelihood that an individual skill makes progress towards completing the original “higher-level” request.
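Read step by step, that scoring becomes a greedy loop. Score every available skill, run the best one, add it to the history, and repeat until a "done" skill wins. The sketch below assumes that loop and the language × affordance product from the SayCan research. `toy_lm_score`, `toy_affordance`, the skill list and the prerequisites are all hypothetical stand-ins: a real system would prompt PaLM with the instruction and history and would use learned value functions. Chain-of-thought prompting is not modelled here.

```python
from typing import Callable, List, Optional, Sequence

# Hypothetical skill library; "done" is the skill that ends the plan.
SKILLS = [
    "go to the kitchen",
    "find an energy bar",
    "find an apple",
    "pick up the energy bar",
    "bring it to you",
    "done",
]

# Hypothetical prerequisites standing in for the robot's real-world state.
PREREQUISITE = {
    "find an energy bar": "go to the kitchen",
    "find an apple": "go to the kitchen",
    "pick up the energy bar": "find an energy bar",
    "bring it to you": "pick up the energy bar",
}


def toy_lm_score(instruction: str, history: List[str], skill: str) -> float:
    """Stand-in for the language model's 'does this skill make progress?' score."""
    # A real system would prompt the model with `instruction` and `history`;
    # this toy ignores `instruction` and returns fixed, made-up numbers.
    if skill in history:
        return 0.01  # already done, not useful again
    if skill == "done":
        return 0.9 if "bring it to you" in history else 0.01
    if "apple" in skill:
        return 0.1  # less relevant to an energy snack
    return 0.5


def toy_affordance(history: List[str], skill: str) -> float:
    """Stand-in for a value function: can the robot perform this skill right now?"""
    needed: Optional[str] = PREREQUISITE.get(skill)
    return 1.0 if needed is None or needed in history else 0.0


def plan(instruction: str, skills: Sequence[str],
         lm_score: Callable[[str, List[str], str], float],
         affordance: Callable[[List[str], str], float],
         max_steps: int = 10) -> List[str]:
    """Greedily choose one skill at a time until 'done' scores highest."""
    history: List[str] = []
    for _ in range(max_steps):
        best = max(skills, key=lambda s: lm_score(instruction, history, s) * affordance(history, s))
        if best == "done":
            break
        history.append(best)  # on a real robot, the skill would be executed here
    return history


steps = plan("I'm tired. Bring me a snack that'll give me some energy, please.",
             SKILLS, toy_lm_score, toy_affordance)
print(steps)
```

Each pass through the loop is one "sub-task for the helper robot to execute, one-by-one". The `max_steps` cap is simply a guard so the toy can't loop forever if "done" never wins.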

The challenge they face, they say, is that unlike typical robotic demos that can perform a single task in a constrained environment, they are asking an Everyday Robots helper robot for assistance in a real-world environment using reasoning.

Note that multiple safety layers do exist on the helper robot (including hardware safety layers, e‑stop, and move‑to‑contact behaviours).

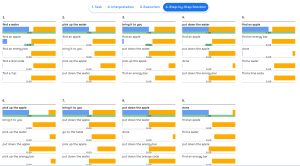

Example stages in decision making are shown above. PaLM-SayCan uses the Pathways Language Model (PaLM), which Google describes as “a 540-billion parameter, dense decoder-only Transformer model trained with the Pathways system.”

For more information, you can visit this research website and this blog post on the Google AI blog, or see the video below.