It’s no secret that video games are inherently linked to the technology that allows us to experience them. However, as the industry continues to evolve, I can’t help but wonder if we’ve gone too far. It’s gotten to the point where graphical complexity is seen as the ultimate indicator of quality, making the game development process infinitely harder and longer. It seems like people will completely disregard an experience if the game’s graphics don’t hold up, or just absolutely demolish a game if it looks like it could have come out 10 years ago. To me, this seems absurd: Graphics are immensely overrated.

Of course, graphics aren’t actually “overrated” in a literal sense. They are the visual gateway into the video games we play. Without them, we’d have nothing to look at. But when I say graphics are overrated, I’m referring to the overwhelming focus that video game communities and marketing put on graphical prowess and modernity. Sometimes it feels like the quality of a game’s art and style is tossed aside just to analyze how “new” and “shiny” something looks.

Some genuinely believe that any game released more than a decade ago is “outdated and ugly,” and it truly makes me wonder: How did we get here? Why are people so obsessed with graphics?

The technology race

There are plenty of reasons why these advancements are such a fundamental part of the industry. Perhaps the single largest is that, at the end of the day, gaming is a technological medium: You need hardware to play the games.

No hardware company will survive selling you the same specs if games aren’t evolving hand in hand with tech. If I purchased an RTX 3080 and the industry decided that we’ve hit the ceiling in hardware requirements, with the RTX 3080 being the final piece of the puzzle, then what would Nvidia sell us?

Yes, they could continue manufacturing RTX 3080s. But whenever someone purchases one, Nvidia will have lost a customer for many, many years, as you can make a GPU last a long time with proper care. When a PC gamer decides to upgrade their tech, it’s usually because their old tech is having trouble running modern software, not because it’s broken.

My Nvidia GeForce GTX 970, a graphics card that launched in 2014, still works. It’s a functioning GPU, but I upgraded to an RTX 3080 because I was sick of games moving on without me. If my GTX 970 ran every piece of software I threw at it perfectly, I wouldn’t need to upgrade. Essentially, Nvidia needs to innovate to sell new hardware. It’s how they turn profits and continue to be a financially successful company.

Console manufacturers have the same goal. How would PlayStation sell a PS5 to you if the hardware specs were the same as the PS4? What good reason could they have to convince you to move over? The only way they could do that is by forcing exclusivity on games, but in this imaginary world, that would be immensely problematic.

And of course, when the industry has that technology in front of them, they’re going to evolve their game engines accordingly. This is a technological medium and many of the brilliant engineers who work within it find themselves able to do more when better hardware is in their hands.

It’s also important to note how damaging this obsession with progress is to game developers and their work cycle. Games are getting more and more complicated to make with every passing year. It is currently harder to make games than it has ever been; development times are longer than they were 10 years ago and the amount of crunch we’ve seen developers endure to get the biggest titles released is alarming. Whether we’re talking about The Last of Us Part II or Cyberpunk 2077, so much overtime has been needed to put these enormous games together. How much worse do things need to get before we realize it’s not worth it?

And when the players themselves are so accustomed to this cycle, they’ll expect it and get upset when it doesn’t continue. It’s common for players to be critical of a lack of technological modernity. If character models, animations, textures, or other types of assets look remotely outdated, it will be pointed out and criticized. This is a trend I absolutely despise.

There’s more to art than just technology

The gaming industry’s obsession with graphical fidelity has only made me more aware of the flaws of technology. The more advanced a game tries to be, the more cognizant I am of how lacking it actually appears. The jarring contrast between an engine’s best-looking moments and the parts that just don’t look right results in a rude awakening, making me realize how much progress the industry still needs to make to reach the goals it’s striving for.

For example, in Ghost of Tsushima, there are visual setbacks that make it harder for me to latch onto its world. Soaring through a gorgeous field of flowers on horseback is an inspiring sight, but when I stop next to a collection of rocks that look blurry or the sun is reflecting light against mud in an unrealistic manner, it quickly takes me away from that moment.

When a game attempts to mimic reality, my brain often processes the moments where it fails to look “realistic” with uncanniness. It just ends up seeming wrong, and whenever I think back to the game, my brain is hyperfixated on that flaw. On the other hand, my brain does not process older games in the same way, as there was never room for me to think of them as “realistic” to begin with.

There is very little potential to mistake Super Mario 64 for an attempt at realism. When I think back on it, I don’t process its technological flaws, because that technology was used to present a certain style that cannot be assumed to be something akin to reality. Your brain can process those “flaws” as artistic intent, whereas if you’re trying to render a realistic glass of milk, your brain will latch onto the flaws that clearly make it look different from what a glass of milk should actually look like.

This isn’t to say modern games don’t have visual styles; of course they do. I’m specifically referring to how my brain processes them. And if you feel your brain is similar, then you probably have the same issue I do.

This also isn’t to say games look ugly nowadays. Obviously, modern games can look absolutely breathtaking, just as much as they could have twenty years ago. I simply do not believe in the notion that graphical complexity or modernity determines whether or not a game is visually compelling.

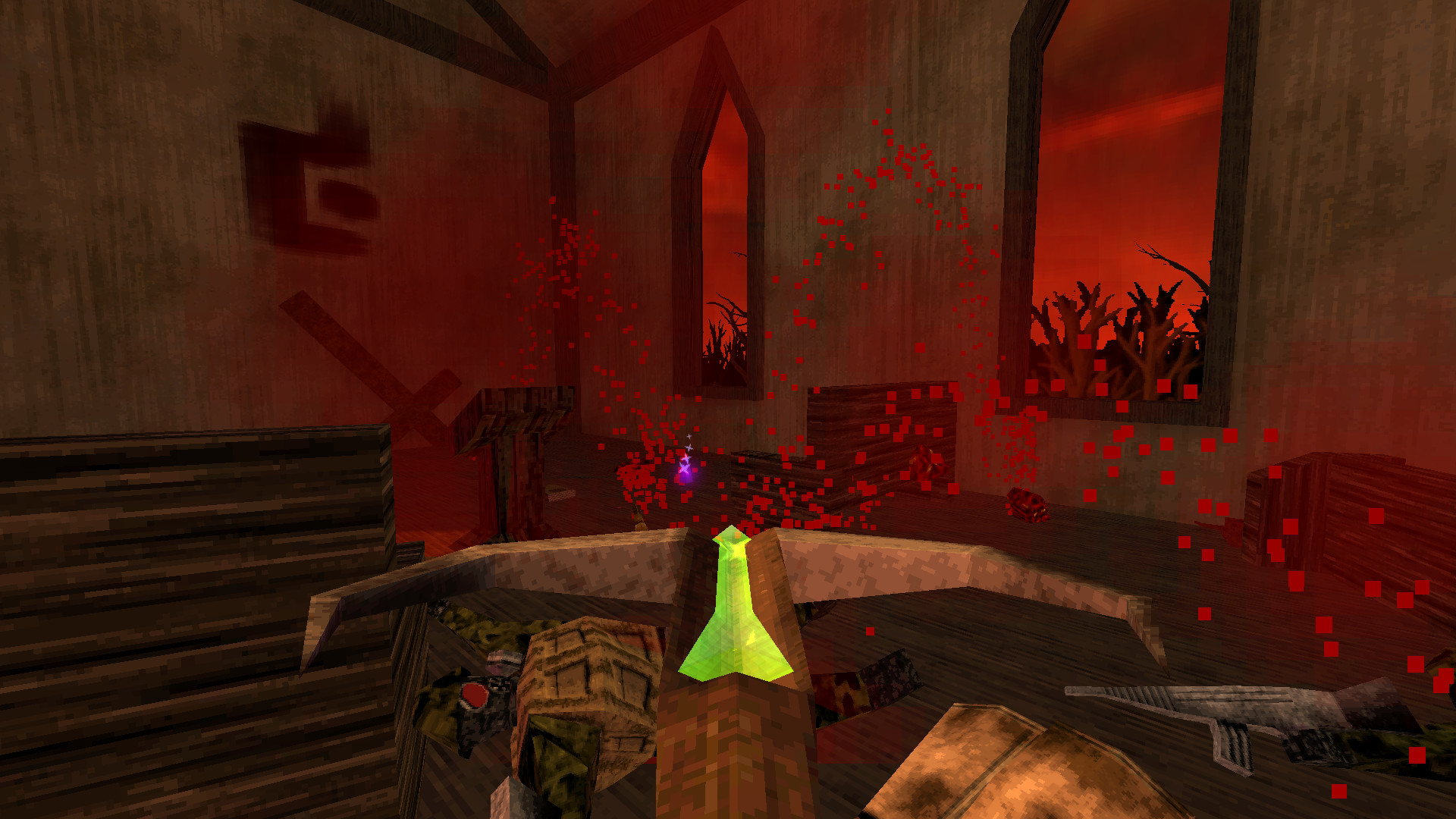

You don’t decide whether an illustration looks good based on the tools used to make it come to life; different tools merely offer alternate styles. Dusk utilizes a graphical style that mimics first-person shooters from the late 1990s, and that style has no bearing on the quality of the art itself. That style is merely a tool to express the intentions of the artist.

This isn’t to say you can’t have preferences with style. Everyone does. I cannot blame someone for preferring the modern, realistic look of AAA games launched in the last few years. It’s a perfectly understandable preference to have, but it’s important to keep in mind that a game doesn’t look good just because it has high-quality textures. There is so much more to the artform than that, and I wish people allowed games more leeway when they’re not as graphically complex as others.

Elden Ring, an experience that boasts some of the most breathtaking visual setpieces in gaming this year, was (and still is) frequently criticized for its outdated graphics by players who believe technology is the be-all and end-all in determining the quality of visuals. Yes, Elden Ring suffered from pop-in, and its foliage and textures look nowhere near as advanced as those of Demon’s Souls (2020). But I genuinely believe Demon’s Souls (2009) looks infinitely better than its remake, even though that game came out 13 years ago.

In fact, the best-looking game ever made was and still is 2005’s Shadow of the Colossus. Technological prowess can’t make up for a vapid aesthetic or a world lacking inspiration. There is so much more to art than just progress, and the industry needs to reconsider this gluttonous obsession.

Bottom line

At what point do we realize that constant advancement is not worth it? How much longer do development times need to get? How much harder does it need to be to create a game? AAA development is a ticking time bomb waiting to blow, and as the industry continues its obsession with progress, the artform will suffer.

I hope I’m wrong. But I truly do believe that this cycle has a time limit. There will be a point where expectations are so unbelievably high that it’s not financially viable to continue making games of that complexity anymore.